I have been working with Active Directory for a while (since 2003) and have seen quite a few environments during that time. Once in a while I have turned AD DS upside down in disaster recovery and deep-dive troubleshooting cases. What I had never seen is an AD database that grew from 2 GB to 12.2 GB in approximately one year. From my point of view that is a huge database; the biggest I had seen in production before this was approximately 4 GB. I have read stories about 180 GB AD DS databases, but that’s a totally different story.

The Problem

So the database is 12.2 GB; why does that matter nowadays? The first two things that come to my mind are critical situations (CritSit) and capacity. If you face a critical situation with a 12 GB AD DS database, you definitely have to rethink your recovery strategy. Promoting a domain controller with replication takes approximately 5-6 hours in this environment.

The second thing is the capacity of the domain controllers compared to smaller environments. After measuring and calculating, I ended up with these numbers for memory consumption:

The calculation showed 15.6 GB of memory needed in total, and the measurement (which lasted two days) confirmed that it should be enough. Just to be sure, I added 24 GB of memory and a 4 GB page file (slightly overcommitted here). It is worth mentioning that during the promotion of a new domain controller memory consumption rose to 75-80% before it dropped to 35%. The drop happened after the domain partition had been replicated and the promotion process moved on to the application partitions (DomainDnsZones and ForestDnsZones).

Another “problem” was curiosity: what on earth caused such a huge increase in the database size? My own tools were exhausted, so I had to pick up the phone and call Microsoft support to get help with analyzing the database.

Analyzing Database – Tools

Basic troubleshooting tools and event logs didn’t show any errors, so we started to dig down to the database level with:

- Dumping the AD database

- Esentutl, which is used for managing JET (Microsoft Joint Engine Technology) databases

- The DBAnalyzer.exe tool (honestly, I had never used it before)

The AD database can be dumped with the old-school tool LDP.exe. Good instructions for doing it can be found here. It dumps the AD database to one file, which can then be imported into Excel, for example. This is an excellent way to find out how data is actually laid out in AD. The linked post is from one of my favorite blogs, a deep dive into what Active Directory really is. Unfortunately it didn’t give us any hint about how the data has allocated storage.

Esentutl was used to get more accurate details on how the data is structured into different tables. The commands to run to get more details from the JET database:

- Stop the NTDS (AD DS) service and run the commands below from an elevated command prompt.

- esentutl /ms ntds.dit /f#legacy >summary.txt

- esentutl /ms ntds.dit /f#all /v /csv >all.csv

- Start the NTDS (AD DS) service

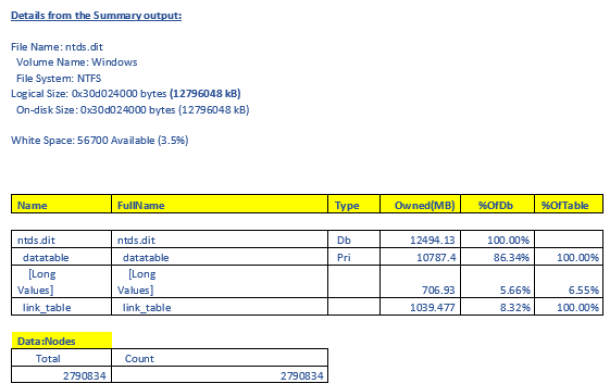

The results showed that the datatable, which holds the pure AD data (users, OUs, groups, attributes and values), contributes most to the size of NTDS.dit. We also found approximately 3.5% of white space in the database.
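If you want to rank the tables in all.csv without opening a spreadsheet, a small script can do it. This is only a sketch: the function name `rank_tables` is hypothetical, and I have not reproduced the exact header that esentutl writes, so the name and size columns are passed in as parameters. Check the first line of your own all.csv and use the column names you find there.

```python
import csv
import io


def rank_tables(csv_text, name_col, size_col, top=5):
    """Return the `top` largest rows as (name, size) tuples, largest first.

    name_col and size_col are ASSUMPTIONS supplied by the caller; they must
    match the header line of the CSV produced by esentutl on your system.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, None)
    if header is None:
        return []
    # Header cells may be padded with spaces, so normalise them first.
    header = [cell.strip() for cell in header]
    name_idx = header.index(name_col)
    size_idx = header.index(size_col)

    rows = []
    for record in reader:
        if len(record) <= max(name_idx, size_idx):
            continue  # short or empty line
        try:
            size = float(record[size_idx].strip())
        except ValueError:
            continue  # size cell is not numeric, skip the row
        rows.append((record[name_idx].strip(), size))

    rows.sort(key=lambda row: row[1], reverse=True)
    return rows[:top]
```

To use it, read the file into a string (for example with `open("all.csv").read()`) and call `rank_tables(text, "<name column>", "<size column>")` with the two column names taken from your own file.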

Despite the results I wasn’t satisfied and needed to go deeper, so I jumped to the next tool, DBAnalyzer.

DBAnalyzer found some interesting stuff. As I said, I had never used it before, but it analyzed the database per attribute and measured attribute sizes, which gave us a hint about the sizes of partitions and attributes. It is worth mentioning that DBAnalyzer only gives a ballpark figure for the DB size: it makes LDAP queries to AD and does not measure the exact size of objects.

- Live objects from all partitions: approximately 2.8 GB

- Deleted: 35 MB

- Recycled: 288 MB

- Total objects: 2.8 GB

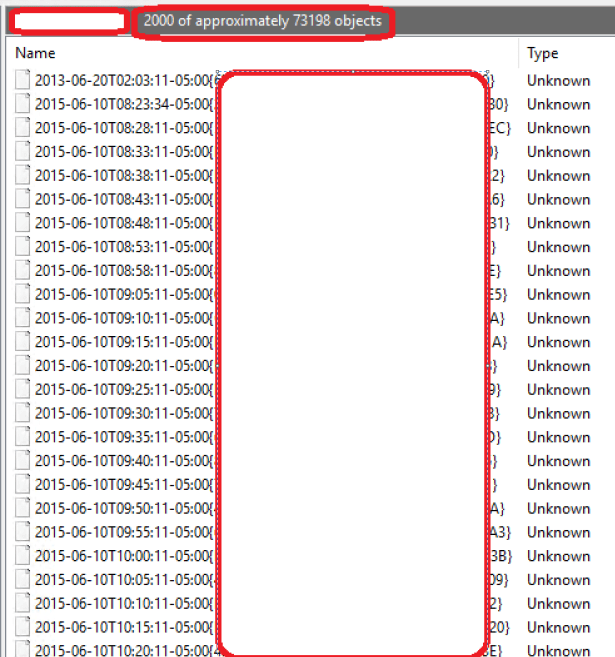

What we can also see is that quite a lot of msFVE-RecoveryInformation objects (each of which contains a Full Volume Encryption recovery password with its associated GUID) have been created in recent months; in fact, the trend has been that thousands of keys are created every month.

BitLocker attributes contain several GB of data; in my opinion that’s way too much.

Even though BitLocker didn’t explain the whole database size, it was worth taking a closer look under the hood. I ran the following commands to get all BitLocker keys from the environment:

1. Export the msFVE-RecoveryInformation objects:

- ldifde /d DC=contoso,DC=com /r "(objectclass=msFVE-RecoveryInformation)" /l objectclass /f .\bitlockers.txt

2. Strip out the attributes and sort the objects by hosting computer name (which always starts at column 72):

- findstr dn: bitlockers.txt |sort /+72 > bitlocker-obj.txt
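Sorting gives you a list to eyeball, but counting keys per computer makes the worst offenders obvious. Below is a minimal sketch that does the counting from the ldifde export. The function names `unfold` and `keys_per_computer` are my own, and the code assumes that every recovery object sits directly under its computer object, so the second component of the DN is the computer. Base64-encoded DNs (lines starting with `dn::`) are skipped.

```python
import re
from collections import Counter

# Split a DN on commas that are not escaped with a backslash.
_UNESCAPED_COMMA = re.compile(r"(?<!\\),")


def unfold(lines):
    """Join LDIF continuation lines (starting with one space) to the previous line."""
    unfolded = []
    for line in lines:
        line = line.rstrip("\r\n")
        if line.startswith(" ") and unfolded:
            unfolded[-1] += line[1:]
        else:
            unfolded.append(line)
    return unfolded


def keys_per_computer(lines):
    """Count msFVE-RecoveryInformation objects per parent (computer) name.

    ASSUMPTION: the parent of each recovery object is the computer object,
    i.e. the DN looks like CN=<timestamp>{<GUID>},CN=<computer>,...
    """
    counts = Counter()
    for line in unfold(lines):
        if not line.lower().startswith("dn: "):
            continue  # attribute lines and base64 "dn::" lines are ignored
        rdns = _UNESCAPED_COMMA.split(line[4:].strip())
        if len(rdns) < 2:
            continue
        # rdns[0] is the recovery object itself, rdns[1] is its parent.
        parent_value = rdns[1].strip().split("=", 1)[-1]
        counts[parent_value] += 1
    return counts
```

With the export from step 1 in place, something like `keys_per_computer(open("bitlockers.txt")).most_common(20)` lists the twenty computers holding the most recovery keys.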

I found out that some of the computers had a lot, and I mean a lot, of BitLocker recovery keys, as seen in the pictures below. The pictures are taken from a computer object (shown in container view). We had definitely found the reason for the large amount of storage used by BitLocker attributes.

Remediation

Based on the findings, the goal naturally was to decrease the Active Directory database size. We decided to go forward with the following actions:

- Correct the process and mechanism for creating BitLocker keys. Currently new keys are written all the time and old ones are not overwritten.

- Key maintenance is currently done via scripts, and we are planning to take Microsoft BitLocker Administration and Monitoring (MBAM) into use

- Remove stale user accounts (approx. 4000)

- Remove stale computer accounts (approx. 7000)

- Perform an offline defragmentation of the AD DS database. This will remove the white space and defragment the DB. The database size is expected to decrease by 1.5 GB with offline defragmentation

When all of the remediation tasks have been done I’ll write part 2, if I still have a chance to work in this environment :)

Curious where you got the DBAnalyzer tool, and how you ran it!

Drop me an email (samilamppu@hotmail.com) and I’ll share it with you, along with instructions.

Thanks,

Sami

I’m looking for the DBAnalyzer tool. Where can I find it?

Please send me an email and I’ll share the tool with you.

-Sami

Sami, I sent you an email so you can share the DBAnalyzer tool. I hope you can reply. Thank you.

Hi Fabricio, I will send it to you later on today.

Thanks,

Sami

Thank you Sami

Hi Sami,

can you please send me the link to the dbanalyzer tool as well?

Thanks,

Markus

Hi,

Yes I can. Please send email to me samilamppu@hotmail.com and I will send the tool with instructions to you.

-Sami

Me too, please. I don’t find the email from you. I will send you an email.

It’s a deal 😊

Thanks for the info. Per other requests I sent you an email to get the DBAnalyzer tool and instructions.

Currently troubleshooting the size of a NTDS file. Looking at the data from running esentutl /ms ntds.dit /f#all /v /csv >all.csv the Link_Table is about 20 times the size of the DataTable. In my reading it should be much smaller than the data table. Have you seen this issue before or know what might be causing it?

Thanks!

Hello Brian! I sent an email to you. If you have any questions related to the tool or the issue, just send me an email.

-Sam

Hi Sami, where did you get the DBanalyzer tool from and can I get a copy…

Hi Adam,

Drop me a line (samilamppu@hotmail.com) and I will share the tool with you, along with the instructions. It’s initially from MS support.

Hi, for anyone interested: DBAnalyzer is part of the Lync Reskit. Still available from MS.

It would be cool to find here the correct syntax to bind with AD.

Thanks for sharing Mike!

Awesome article, but can you share details on how you were able to connect to the .dit? dbanalyzer /sqlserver:dc.acme.com? Or does MS have secrets beyond dbanalyzer /? =)

Hello Sami,

This is such a great article!

I am currently working for a client who has exactly the same issue.

According to DB Analyzer, the total space consumed by all the objects (including live, deleted and recycled) is approximately 10GB.

However, ntds.dit is close to 32GB.

We are working on removing the keys using scripts. The total number of keys to be removed is close to 8 million; we have removed 2 million so far and, according to the garbage collection events, just 200 MB has become free, which is not a good sign.

I am thinking that even after removal of all 8 million keys, we are going to end up with a considerable size of NTDS.dit.

Did you ever get a chance to write part 2? Please let me know.

I will be eagerly waiting for your response.

Thanks,

Rishabh

Hello Rishabh,

Thank you for reading! In your case, a huge number of BitLocker keys you have;) It would be interesting to hear what will be the DB size after you have removed all the unnecessary keys from your environment.

Unfortunately, I changed employer and haven’t got a chance to finalize the work I started.

Hello Rishab,

Could you help me with the DBAnalyzer tool and explain how you made it work?

Thanks

Piyush

Hi, did you already send me an email? If you did, I will share the tool with you. It’s also included in the Lync Reskit tools.

Hello Sami,

How can I get this DBAnalyzer tool? I am experiencing the same issue in my organisation. Please suggest soon.

Hi Sami,

I have sent an email to you. Although I have downloaded the said tool from the Skype Reskit, I am not sure how to bind it with the AD database. Please provide the instructions as you have sent them out to others.

Thanks

Piyush Gupta